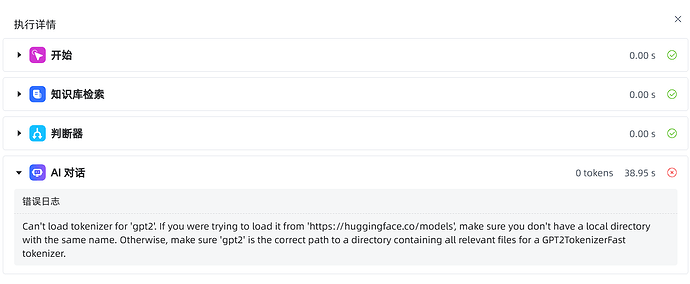

Please check your local model integration configuration; the path looks wrong.

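The message means: "If you want to download this model online, make sure there is no directory with the same name as the model at that path. If you want to use a local model, make sure the path is correct."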
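To work out which of those two cases applies, it can help to inspect the directory first. The sketch below is a hypothetical helper (`diagnose_tokenizer_dir` is not part of MaxKB or transformers). It assumes a GPT-2 fast tokenizer directory is loadable when it contains `tokenizer.json`, or both `vocab.json` and `merges.txt`.

```python
import os

# Assumption: GPT2TokenizerFast can load from tokenizer.json alone,
# or from the vocab.json + merges.txt pair.
FAST_FILE = "tokenizer.json"
SLOW_FILES = ("vocab.json", "merges.txt")


def diagnose_tokenizer_dir(path: str) -> str:
    """Hypothetical helper: classify a local tokenizer path.

    "missing"    - nothing exists at the path (wrong path for a local model)
    "not_a_dir"  - something exists there, but it is a file, not a directory
    "incomplete" - the directory exists but has no usable tokenizer files
                   (fix its contents, or delete it if you want an online download)
    "ok"         - the directory looks loadable
    """
    if not os.path.exists(path):
        return "missing"
    if not os.path.isdir(path):
        return "not_a_dir"
    files = set(os.listdir(path))
    if FAST_FILE in files or all(name in files for name in SLOW_FILES):
        return "ok"
    return "incomplete"
```

For example, `diagnose_tokenizer_dir("/opt/maxkb/app/models/tokenizer/gpt2")` on the server should return `"ok"` before you rely on `local_files_only=True`.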

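Also, I don't get this error when I create an application with simple orchestration; it only happens when I create one with advanced orchestration. I don't know what's going wrong.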

Has the problem been solved?

I ran into this problem too. I hope this helps:

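```python
class TokenizerManage:
    tokenizer = None

    @staticmethod
    def get_tokenizer():
        from transformers import GPT2TokenizerFast

        if TokenizerManage.tokenizer is None:
            # Read the tokenizer location from config instead of hard-coding it.
            # CONFIG is the project's global config object.
            setting = CONFIG.get_gpt2_tokenizer_setting()
            pretrained_model_name_or_path = setting["GPT2_TOKENIZER_MODEL_NAME"]
            cache_dir = setting["GPT2_TOKENIZER_MODEL_PATH"]
            TokenizerManage.tokenizer = GPT2TokenizerFast.from_pretrained(
                pretrained_model_name_or_path,
                cache_dir=cache_dir,
                local_files_only=True,  # load from disk only, never download
                resume_download=False,
                force_download=False)
        return TokenizerManage.tokenizer
```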

```python
# Added to the config class (the object behind CONFIG)
def get_gpt2_tokenizer_setting(self) -> dict:
    return {
        "GPT2_TOKENIZER_MODEL_NAME": self.get('GPT2_TOKENIZER_MODEL_NAME'),
        "GPT2_TOKENIZER_MODEL_PATH": self.get('GPT2_TOKENIZER_MODEL_PATH')
    }
```

Path configuration:

```yaml
# GPT2 model configuration
GPT2_TOKENIZER_MODEL_PATH: /opt/maxkb/app/models/tokenizer
GPT2_TOKENIZER_MODEL_NAME: /opt/maxkb/app/models/tokenizer/gpt2
```
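The modified `get_tokenizer` loads the tokenizer once, caches it, and takes both arguments from config. Below is a minimal, self-contained sketch of that pattern. `LazyTokenizer` and `load_fn` are hypothetical names: `load_fn` stands in for `GPT2TokenizerFast.from_pretrained` so the example runs without transformers, and the cache lives on the instance here rather than on the class as in the original.

```python
from typing import Any, Callable, Dict, Optional


class LazyTokenizer:
    """Sketch of the TokenizerManage idea: load on first use, then reuse."""

    def __init__(self, settings: Dict[str, str], load_fn: Callable[..., Any]):
        self._settings = settings
        self._load_fn = load_fn  # stand-in for GPT2TokenizerFast.from_pretrained
        self._tokenizer: Optional[Any] = None

    def get(self) -> Any:
        if self._tokenizer is None:
            # Look values up by key so the result does not depend on dict ordering.
            self._tokenizer = self._load_fn(
                self._settings["GPT2_TOKENIZER_MODEL_NAME"],
                cache_dir=self._settings["GPT2_TOKENIZER_MODEL_PATH"],
                local_files_only=True,  # mirror the original: no network access
            )
        return self._tokenizer
```

Because `GPT2_TOKENIZER_MODEL_NAME` is a full directory path here, transformers should read the files straight from that directory. `cache_dir` mainly matters when a hub id such as `'gpt2'` is passed instead.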

That is the change I made. If you want a quicker fix, you can also just edit the code below directly:

```python
class TokenizerManage:
    tokenizer = None

    @staticmethod
    def get_tokenizer():
        from transformers import GPT2TokenizerFast

        if TokenizerManage.tokenizer is None:
            TokenizerManage.tokenizer = GPT2TokenizerFast.from_pretrained(
                'gpt2',  # model name or path
                cache_dir="/opt/maxkb/model/tokenizer",
                local_files_only=True,
                resume_download=False,
                force_download=False)
        return TokenizerManage.tokenizer
```

Just change the arguments to full paths, i.e.:

- `cache_dir`: `/opt/maxkb/app/models/tokenizer`
- the first argument (`'gpt2'`): `/opt/maxkb/app/models/tokenizer/gpt2`